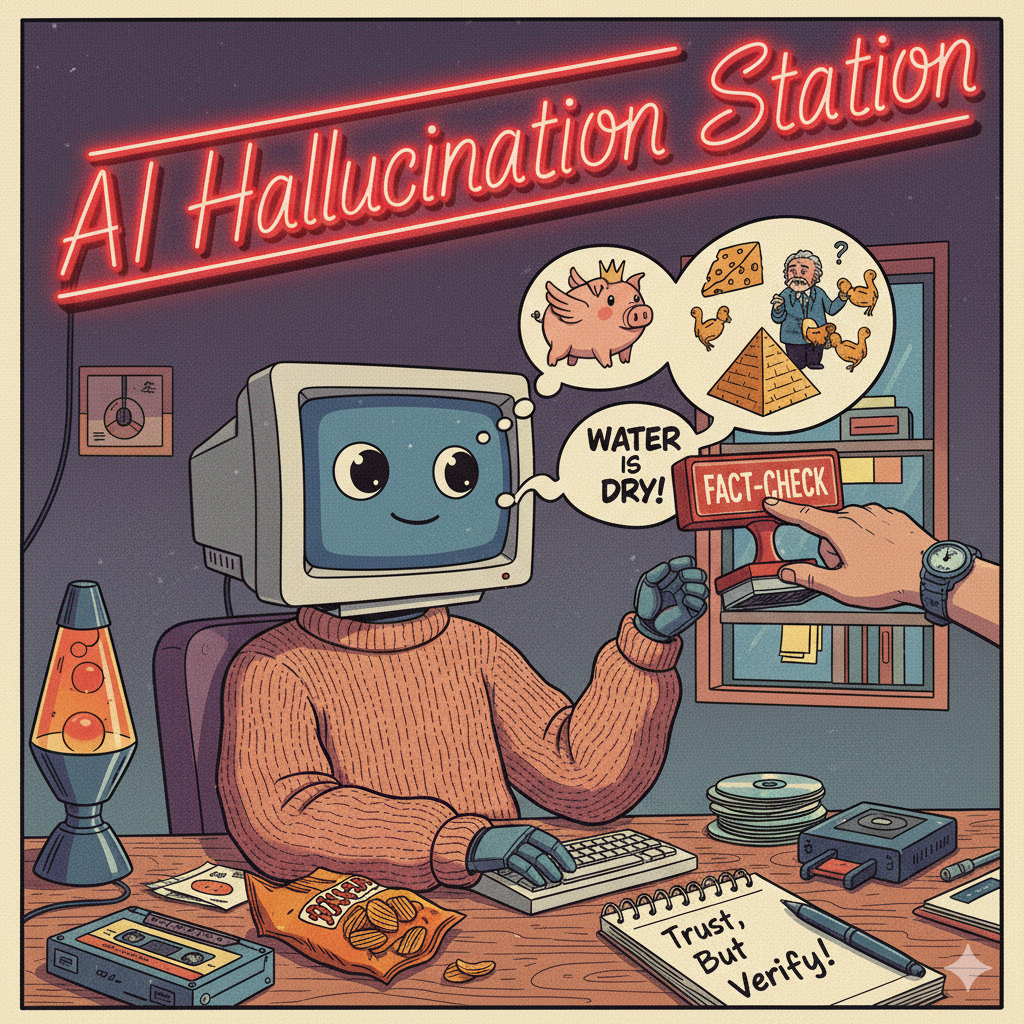

Why Does AI Hallucinate?

Even the smartest AI tools, like ChatGPT, can sound completely confident and still be completely wrong. These "hallucinations" happen because the AI is not actually thinking or fact-checking. It predicts the most likely next word based on patterns in its training data, not on whether a statement is true. When it does not know an answer, it still guesses, and those guesses can look very convincing.

What researchers are trying

Reporting in The Wall Street Journal describes efforts to reduce this problem. The strongest results come when the AI draws on real sources instead of relying only on its internal patterns. This is called retrieval-based AI. Another helpful approach is training models to say "I am not sure" instead of inventing an answer. System reminders and human oversight, such as a quick fact-check or a common-sense review, also help.

What to do as a user

Treat AI as a research assistant, not a truth engine. Ask it to explain, compare, or summarize, then double-check the facts yourself. AI is excellent at speeding up your work, but certainty still comes from your own thinking.

If you are ready for tailored clarity in this changing landscape, you can reach Sage Blue Life here.